Overview

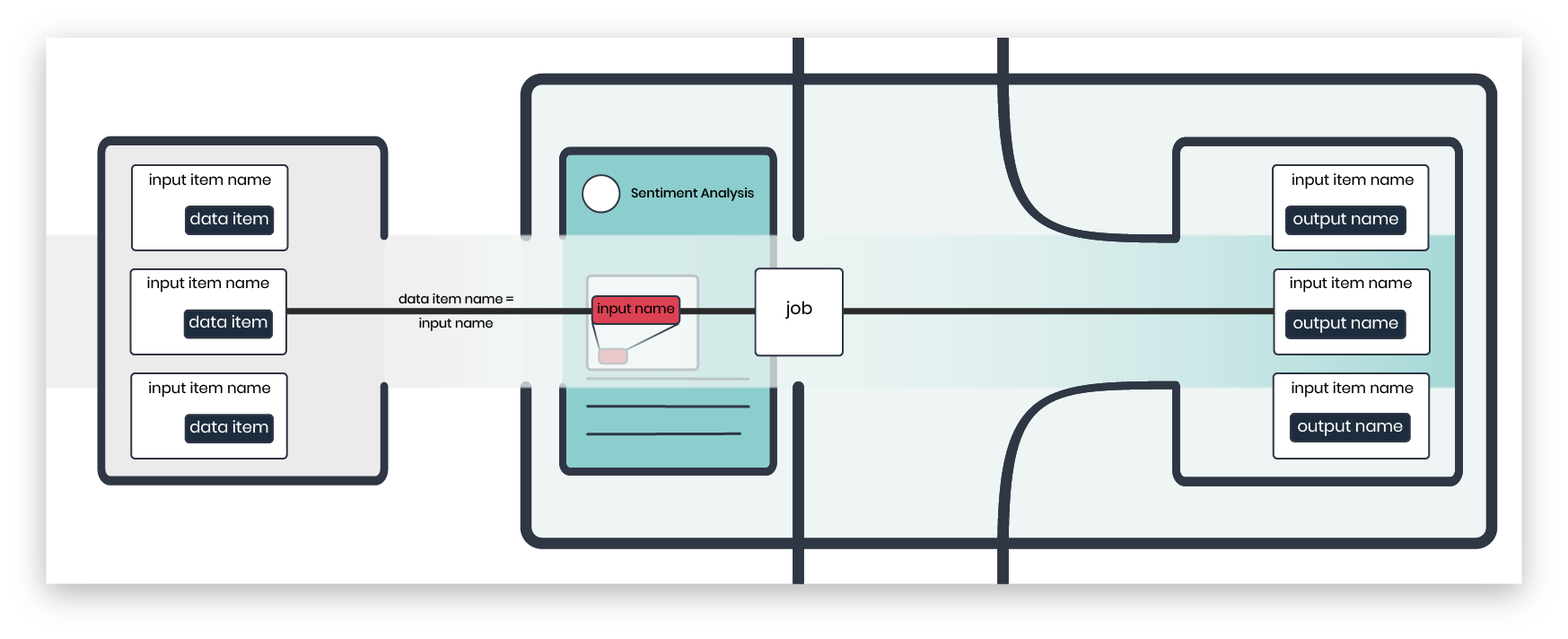

A job is a process that sends data to a model, runs the model on that data, and returns results. Jobs can process multiple input items and multiple data files. The job lifecycle starts when a job is submitted and ends when the job run is complete. As input items are completed, their results become available. In the meantime, the job details route reports the job’s progress through its lifecycle.

Several factors determine the job completion speed. These include the input size, number of input items and data files, the model, the memory and hardware available to run the model, and the API key’s priority level. Model readiness and autoscaling settings also affect the job completion speed. Modzy provides the capability to manage readiness and autoscaling settings for each model-version.

Job lifecycle at the job level.

Job lifecycle at the input level.

Job requests

To submit a job request, send the inputs along with the identifier and version number of the model to run. Modzy returns a job identifier, which is required to check the job’s progress and retrieve results.

Input types

Input types are declared in the job request. Modzy supports several input types: text; embedded, for Base64 strings; aws-s3, azureblob, storage-grid, and aws-s3-folder, for inputs hosted in buckets; and jdbc, for inputs stored in databases. Models may accept only some input types. As a good practice, check that the model accepts your chosen input type before submitting a job.

Input file types

Models accept specific input file MIME types. Some models require multiple input files of different types to run.

Accepted MIME types.

Input item names

Model’s input item name

Models require each input to have a name declared in the job request. This name is the model’s input name and can be found in the model’s details.

User’s input item name

Additionally, users can set their own input names. When multiple input items are processed in a job, these names are helpful to identify and get each input’s results.
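As a rough illustration of how the model’s input item name and the user’s input item names fit together, the sketch below builds a job request body in Python. The field layout (`model`, `input`, `sources`), the helper `build_job_request`, and all example values are assumptions made for illustration and are not taken from this page; check the jobs reference for the actual request schema.

```python
import json


def build_job_request(model_id, version, model_input_name, items, input_type="text"):
    """Build a job request body for one or more input items.

    items maps each user-chosen input item name to the data for that item.
    The dictionary layout below is an assumed schema, not the documented one.
    """
    if not items:
        raise ValueError("a job needs at least one input item")
    # Each user-named item holds the data under the model's own input name.
    sources = {
        user_name: {model_input_name: data}
        for user_name, data in items.items()
    }
    return {
        "model": {"identifier": model_id, "version": version},
        "input": {"type": input_type, "sources": sources},
    }


request_body = build_job_request(
    model_id="example-model-id",    # hypothetical model identifier
    version="1.0.0",                # hypothetical version number
    model_input_name="input.txt",   # hypothetical model input item name
    items={"review-1": "Great service.", "review-2": "Slow delivery."},
)
print(json.dumps(request_body, indent=2))
```

Keying the sources by the user’s names keeps each item addressable later, when results are returned per input item.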

Multiple input items.

Multiple data files

The aws-s3-folder input type points to a directory with multiple data files. Set the path to a directory that stores the data files you need.

Call the model details route or visit the Marketplace to find specific model input requirements. Visit the jobs reference to learn more about inputs.

Job progress

To check a job’s progress, call the job details route. If multiple input items are submitted and the job is still in progress, some items may already be fully processed and have their results available. This route reports the status of the job and which items are complete, in progress, or pending. It also reports any items that failed to be processed. Once the job is complete, all the processed results are available and the job’s status is set to COMPLETED.

Job status

The job status describes the state of the job’s execution. Jobs start as SUBMITTED and move to IN_PROGRESS when they begin to run. If a timeout is set and expires before the run completes, the job transitions to TIMEDOUT. Once finished, the status is set to COMPLETED or ERROR. If a user cancels a job before it finishes, the status is set to CANCELED.

| Job status | Description |
|---|---|
| SUBMITTED | The job is in the queue. |
| TIMEDOUT | The job’s timeout expired before run completion. |
| IN_PROGRESS | The job is running. Partial results may be available. |
| COMPLETED | The job is finished and at least one input was processed successfully. |
| CANCELED | A user canceled the job. |
| ERROR | The job ran partially and failed. |
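The statuses above lend themselves to a simple polling loop. The following is a minimal sketch, not a client implementation: `fetch_status` is a hypothetical caller-supplied function that would wrap a call to the job details route and return the status string, and treating TIMEDOUT as a final status is an assumption.

```python
import time

# Statuses after which the job is assumed not to change any more.
TERMINAL_STATUSES = {"COMPLETED", "CANCELED", "ERROR", "TIMEDOUT"}


def wait_for_job(fetch_status, job_id, interval_seconds=5.0, max_polls=120,
                 sleep=time.sleep):
    """Poll until the job reaches a terminal status or max_polls is used up.

    fetch_status is a hypothetical callable: it takes a job identifier and
    returns the current job status string.
    """
    status = None
    for attempt in range(max_polls):
        status = fetch_status(job_id)
        if status in TERMINAL_STATUSES:
            return status
        if attempt < max_polls - 1:
            sleep(interval_seconds)  # wait before asking again
    raise TimeoutError(f"job {job_id} still {status} after {max_polls} polls")


# Usage with a stand-in for the real route call and no actual waiting:
fake_statuses = iter(["SUBMITTED", "IN_PROGRESS", "COMPLETED"])
final = wait_for_job(lambda _job_id: next(fake_statuses), "job-123",
                     sleep=lambda _seconds: None)
print(final)  # COMPLETED
```

Passing `sleep` as a parameter keeps the loop testable without real delays.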

Queues

Modzy sends Submitted jobs to queues. Job inputs line up by age in one queue per model. Every ten seconds, Modzy checks whether there are more queues than available engines:

- If there are more available engines than queues, Modzy distributes the available engines to all models, proportionally to the queue sizes, based on the number of inputs.

- If there are fewer available engines than queues, Modzy runs the jobs for models with older inputs first, based on the job timestamps.
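The two cases can be sketched as a small allocation function. This is only an illustration of the rule as described here, not Modzy’s scheduler: the function name `allocate_engines` is hypothetical, and several details are assumptions, namely that every non-empty queue gets at least one engine in the first case, that leftover engines are shared by largest remainder, that no cap is applied per model, and that an equal number of engines and queues is handled like the first case.

```python
def allocate_engines(queues, engines):
    """Return a mapping of model name to number of engines assigned.

    queues maps a model name to the timestamps of its waiting inputs.
    """
    active = {model: stamps for model, stamps in queues.items() if stamps}
    if not active or engines <= 0:
        return {}

    if engines >= len(active):
        # Assumption: one engine per queue, then share the spare engines
        # proportionally to queue size (largest remainder for leftovers).
        allocation = {model: 1 for model in active}
        spare = engines - len(active)
        total_inputs = sum(len(stamps) for stamps in active.values())
        shares = {m: spare * len(s) / total_inputs for m, s in active.items()}
        for model, share in shares.items():
            allocation[model] += int(share)
        leftover = spare - sum(int(share) for share in shares.values())
        by_remainder = sorted(active, key=lambda m: shares[m] - int(shares[m]),
                              reverse=True)
        for model in by_remainder[:leftover]:
            allocation[model] += 1
        return allocation

    # Fewer engines than queues: models with the oldest waiting input go first.
    oldest_first = sorted(active, key=lambda m: min(active[m]))
    return {model: 1 for model in oldest_first[:engines]}


# Six engines, two queues: engines follow the queue sizes (3 inputs vs 1).
print(allocate_engines({"a": [1, 2, 3], "b": [5]}, 6))      # {'a': 4, 'b': 2}
# Two engines, three queues: the models with the oldest inputs are served.
print(allocate_engines({"a": [10], "b": [2], "c": [7]}, 2))  # {'b': 1, 'c': 1}
```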

Processing engines

From the queue, inputs are sent to and run by processing engines. Model-versions may be set to run with multiple processing engines. In this case, Modzy creates serial queues and multiple model-version instances, which run inputs in parallel.

Timeouts

Timeouts define the maximum allowed time to run a processing engine. Both models and jobs have timeout settings.

Model timeouts

Each model defines its own timeouts:

- The model’s timeout status is the maximum time allowed for a model to load and be ready to run data.

- The model’s timeout run is the maximum time allowed to execute inference on each input item. It starts when a single input item is loaded. If this timeout expires while an input item is running, the inference for that input is canceled.

Check the model details to find the timeout values for any given model.

Job timeout

The job timeout can be set in the job request. If it is not set, the job timeout is derived from the model timeouts and the number of pending inputs for that model.

Job results

To get your results, call the retrieve results route. Results are available per input and can be identified by the name provided for each input item in the job request. You can also add an input name to the route to limit the results to that input item.

Results are organized under input item names.

Jobs requested for multiple input items may have partial results available before the job completes. As items are fully processed, they appear in the job details route while the job continues to run. Call the retrieve results route to get processing information for a specific input, including status, startTime, endTime, updateTime, elapsedTime, and the assigned engine.
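To show how per-input results might be read, the sketch below pulls the processing fields listed above out of a results response. The response layout (a `results` mapping keyed by input item name, with an `engine` field), the helper `summarize_results`, and every sample value are assumptions for illustration; the actual response may be shaped differently.

```python
PROCESSING_FIELDS = ("status", "startTime", "endTime", "updateTime",
                     "elapsedTime", "engine")


def summarize_results(response, input_name=None):
    """Return the processing details per input item, keyed by input name.

    response is assumed to contain a "results" mapping from input item name
    to that item's details. Fields that are absent are reported as None.
    """
    results = response.get("results", {})
    if input_name is not None:
        # Limit the summary to a single input item, if it is present.
        results = {input_name: results[input_name]} if input_name in results else {}
    return {
        name: {field: details.get(field) for field in PROCESSING_FIELDS}
        for name, details in results.items()
    }


# Hypothetical response for a job that is still running its second input.
sample_response = {
    "results": {
        "review-1": {
            "status": "SUCCESSFUL", "engine": "engine-a",
            "startTime": "2024-01-01T00:00:00Z", "updateTime": "2024-01-01T00:00:02Z",
            "endTime": "2024-01-01T00:00:02Z", "elapsedTime": 2000,
        },
        "review-2": {
            "status": "PROCESSING", "engine": "engine-b",
            "startTime": "2024-01-01T00:00:01Z", "updateTime": "2024-01-01T00:00:01Z",
        },
    }
}

for name, details in summarize_results(sample_response).items():
    print(name, details["status"], details["elapsedTime"])
```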

Explainability

Models with the explainability feature return JSON outputs with mask values for explainable results. Mask values include the prediction results and the explainability results (pixel values that motivate the prediction made by the model).

Input status

The input status describes the processing state of a specific input. Inputs start as FETCHING_DATA and move to PROCESSING. They can then transition to FAILED or SUCCESSFUL.

| Input status | Description |
|---|---|
| FETCHING_DATA | The input item is retrieved. |
| PROCESSING | A pod is assigned to the input for processing. |
| FAILED | The input processing failed. |
| SUCCESSFUL | The input was processed successfully. |